Ben Nassi, a PhD student at Ben-Gurion University, has already used drones and inexpensive projectors to conduct spoofing attacks against a Mobileye driver-assist system. Now he has turned the same technique on Tesla's Autopilot.

These spoofing attacks exploit differences between how humans and AI systems process images: Nassi's projected images would not fool a human driver, but they can fool a Tesla.

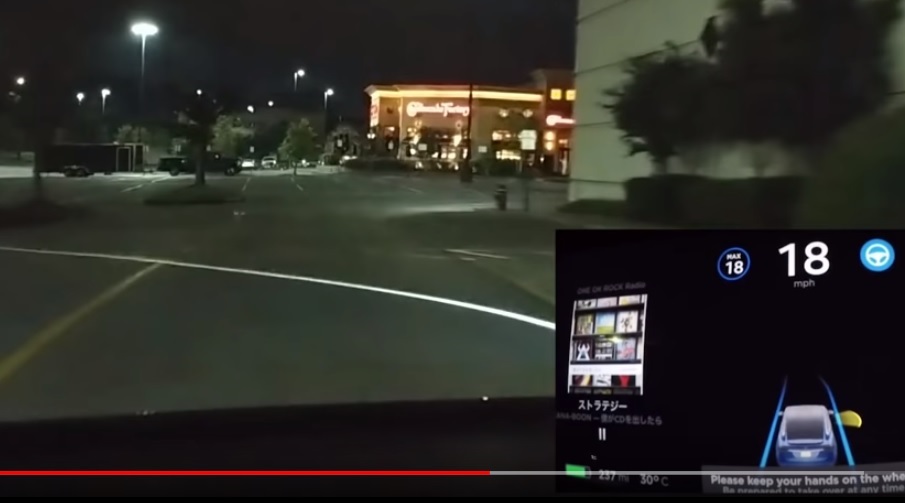

In the video above, Nassi outlines the dangers of these spoofing attacks.

More from an Ars Technica report:

From a security perspective, the interesting angle here is that the attacker never has to be at the scene of the attack and doesn’t need to leave any evidence behind—and the attacker doesn’t need much technical expertise.

A teenager with a $400 drone and a battery-powered projector could reasonably pull this off with no more know-how than “hey, it’d be hilarious to troll cars down at the highway, right?”

The equipment doesn’t need to be expensive or fancy—Nassi’s team used several $200-$300 projectors successfully, one of which was rated for only 854×480 resolution and 100 lumens.

Tesla's Autopilot is a Level 2 driver-assistance system, so it is not meant to drive the car without a human operator supervising it.

An alert operator could catch the errors these attacks cause, but the experiment highlights another problem: many drivers don't think they need to pay attention.

According to Ars Technica, a survey last year found that half of the polled drivers believe it is safe to take their hands off the wheel while Tesla's Autopilot is engaged. A few even said it was safe to take a nap.
